Towards the end of our field season in 2022, my colleague and I began searching for an application to add annotations to our extensive video footage. After exploring various options, we concluded that DashWare, an open-source application, was the best tool* for our needs. This blog post explains how to use DashWare and shares my insights on its application in our conservation work.

Our work relies extensively on off-the-shelf drones for field operations. In addition to capturing photos and videos, drones record vital flight data such as distance from launch, geographical coordinates, flight time and battery status. This information is indispensable for research and for gaining a better understanding of wildlife and ecosystems.

However, once we retrieve the video footage from the devices, this valuable flight data is not visible during video playback. Not all drones generate subtitle files, and even when they do, the subtitles are shown only as plain text in the media player. This text-based representation does not effectively convey the data we collect during these flights alongside the aerial footage. This led us to search for an application that could help us annotate our aerial footage. We had specific criteria in mind: the tool had to be open-source, user-friendly, and require minimal setup and learning.

Open-source software like DashWare can also be valuable for conservationists who use drones to monitor wildlife and their natural habitats. It overlays telemetry data on drone videos, providing context on the flight path and animal behaviour that complements traditional field practices. DashWare synchronises the telemetry data with the video footage so that the data is displayed in real time as the video plays. In addition to displaying data such as speed, altitude and GPS coordinates, it offers customisation options such as font, colour, text position and the selection of data points.

(A) GETTING STARTED:

Installing DashWare

The open-source application can be downloaded for free from the DashWare website, http://www.dashware.net/dashware-download/, or from other sources on the web.

Video Processing

DashWare 1.9.1 has a known issue: source videos without an audio track cannot be exported properly. DJI drones cannot record sound without an external device set up for this purpose, so their footage has no audio track. The issue is relatively easy to resolve by adding an audio track to the video. We used DaVinci Resolve to add a free audio file to our video footage.
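For readers who prefer a command-line route, a silent audio track can also be added with FFmpeg instead of DaVinci Resolve (this is not the method we used). The sketch below assumes FFmpeg is installed and on the PATH; it only builds a command that copies the video stream unchanged and adds a silent stereo AAC track generated by FFmpeg's `anullsrc` source. The file names are hypothetical.

```python
import shlex


def silent_audio_command(src: str, dst: str, sample_rate: int = 48000) -> list[str]:
    """Build an FFmpeg command that copies the video stream of `src` and
    adds a silent stereo AAC track, writing the result to `dst`."""
    return [
        "ffmpeg",
        "-i", src,                      # input 0: the drone video (no audio)
        "-f", "lavfi",                  # input 1 is generated by libavfilter
        "-i", f"anullsrc=channel_layout=stereo:sample_rate={sample_rate}",
        "-map", "0:v:0",                # video from input 0
        "-map", "1:a:0",                # silent audio from input 1
        "-c:v", "copy",                 # do not re-encode the video
        "-c:a", "aac",                  # encode the silence as AAC
        "-shortest",                    # stop when the video stream ends
        dst,
    ]


if __name__ == "__main__":
    # Hypothetical file names; pass the list to subprocess.run(cmd, check=True)
    # to actually execute it.
    cmd = silent_audio_command("DJI_0001.MP4", "DJI_0001_audio.mp4")
    print(shlex.join(cmd))
```

Copying the video stream with `-c:v copy` avoids re-encoding the footage, so image quality is unchanged and the step is fast; `-shortest` is needed because `anullsrc` would otherwise generate silence indefinitely.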

File Format

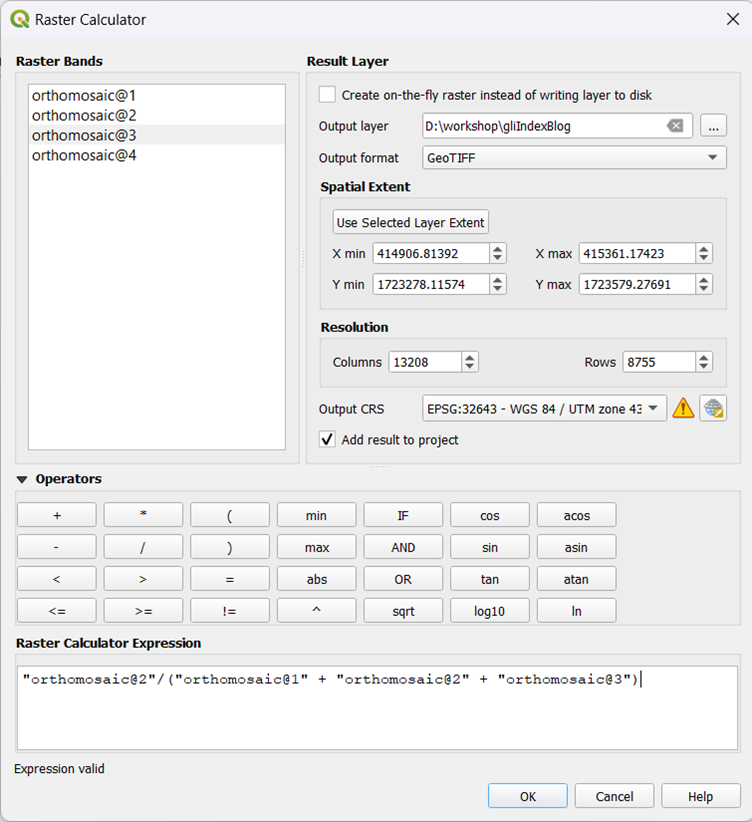

Telemetry data from a UAV can be overlaid onto a video using a CSV file. Obtain the CSV file by exporting the data from the UAV's flight controller or another telemetry source.

Next, edit the CSV so that it starts at the same moment as the video footage. Open the CSV file in spreadsheet software and navigate to the column labelled 'isVideo'. This column is 0 before recording starts and changes to 1 once the video begins. Select all the rows above the first 1 in the 'isVideo' column (the rows where it is still 0) and delete them.
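The trimming step above can also be scripted, which helps when there are many flights to process. This is a minimal sketch using Python's standard `csv` module, assuming the log has an 'isVideo' column containing 0/1 values as in the screenshot. Like the manual step, it only drops the rows before the first 1 and keeps everything after it.

```python
import csv


def trim_to_video_start(rows: list[dict[str, str]], flag: str = "isVideo") -> list[dict[str, str]]:
    """Return the rows from the first one where `flag` equals "1" onwards.

    Rows logged before recording started (flag == "0") are dropped, which
    mirrors deleting them by hand in a spreadsheet.
    """
    for i, row in enumerate(rows):
        if row[flag].strip() == "1":
            return rows[i:]
    raise ValueError(f"no row has {flag} == 1; was video recorded on this flight?")


def trim_csv_file(src: str, dst: str, flag: str = "isVideo") -> None:
    """Read a flight-log CSV, trim it, and write it out with the same columns."""
    # utf-8-sig also accepts files saved with a byte-order mark by spreadsheets
    with open(src, newline="", encoding="utf-8-sig") as f:
        reader = csv.DictReader(f)
        fieldnames = reader.fieldnames
        rows = list(reader)
    kept = trim_to_video_start(rows, flag)
    with open(dst, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(kept)
```

Usage, with hypothetical file names: `trim_csv_file("flight_log.csv", "flight_log_trimmed.csv")`. Writing to a new file rather than overwriting the original keeps the untrimmed log available if the cut needs to be redone.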

Screengrab of a CSV file with 'isVideo' column highlighted.

(B) DASHWARE PROJECT:

Launch DashWare from the start menu or desktop shortcut.

Click the ‘File’ button in the top left corner of the main window.

Select ‘New Project’ and enter a name for your project in the ‘Project Name’ field.

In the same dialog box, select ‘<None>’.

Click on ‘Ok’ to save these changes.

DashWare will open the new project and display the main window.

When one starts a new project in DashWare, the main window displays a blank video screen with the DashWare logo and some gauges on the left-hand side, while the right-hand side displays the primary workspace.

The ‘Project’ tab is the central location for managing and configuring any project. It includes several sub-tabs that allow access to different types of information and settings related to the project.

Click on the ‘+ (Plus)’ icon next to the ‘Video’ title in the tab. Browse to and select the pre-processed video.

Next, click on the ‘+ (Plus)’ icon for data files in the same tab to open the ‘Add Data File’ dialog box. Click on the ‘Browse’ button under the title ‘Data logger file’, then locate and select the .csv file containing the data from the corresponding drone flight.

Click on the downward arrow under the title ‘Choose a data profile’ and select ‘Flytrex’. Click on the ‘Add’ button to confirm your choices.

Add pre-processed .csv and .mp4 files using the data logger.

(C) PROJECT GAUGES:

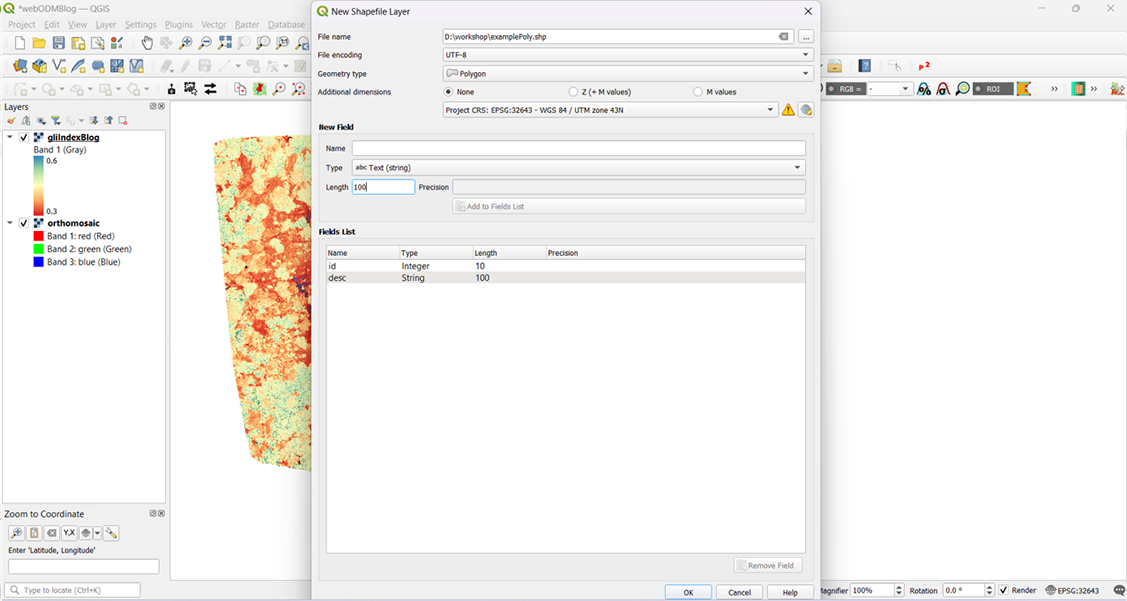

In DashWare, project gauges are graphical elements that overlay data onto videos. They can display various types of information, such as GPS coordinates or telemetry data. The Gauge Toolbox is a feature that allows one to add, create, and customize these gauges in a project. It also provides tools and options to design and adjust the appearance of data overlays.

The 'Filter' field in the Gauge Toolbox is useful for searching for and narrowing down to a specific gauge. It helps to first list the attributes you need, such as speed, altitude, distance from launch and height above sea level.

Gauge Toolbox search for the keyword 'altitude'.

Using the Gauge Toolbox, select the gauges that correspond to these attributes. Make sure that each gauge uses the same units as the data in the CSV file, such as kilometres per hour for speed or metres for altitude.

After selecting the appropriate gauges, add them to the project by clicking the gauge button or by dragging them onto the video screen. Each gauge can then be repositioned by clicking and dragging it to the preferred location.

Gauges can also be modified as needed, such as the compact DashWare gauge shown above, which displays speed, sea level, altitude, vertical speed, heading, takeoff distance and travelled distance. To learn more about modifying and creating gauges, read the following blog. Modified gauges for displaying telemetry on UAV footage can be downloaded from our satellite drone imagery workflow page.

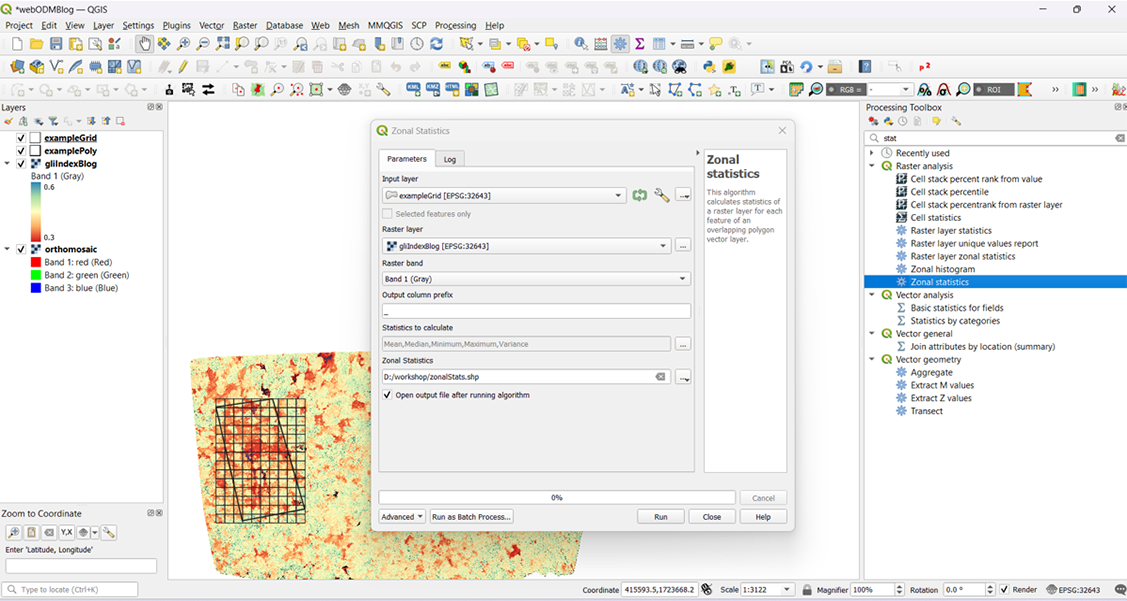

(D) DATA SYNCHRONISATION:

Play the video in the workspace to check whether the data is in sync with the video footage. If the gauge overlay is not in sync with the footage, check the following:

Compare each gauge's unit system with the units in the flight log.

DashWare does not automatically convert values to the units of the selected gauge, so it is important to choose gauges that use the same unit system as the flight log.

Check whether each gauge has the corresponding data value mapped to it.

(a) To do so, switch to the ‘Project’ tab in the primary workspace in DashWare.

(b) Locate the gauge in the ‘Project Gauges’ sub-tab and double-click on the gauge name.

(c) An editable dialog box titled ‘Gauge Input Mapper’ will be displayed, with two input options: (i) Data File and (ii) Data Value.

(d) Review both fields for the correct entry. To change one, use the downward arrow to select the appropriate value from the list. Click on the ‘OK’ button to save the changes.

Gauge Input Mapper with input options: (i) Data File and (ii) Data Value.

To sync the footage with the CSV file, navigate to the ‘Synchronization’ tab.

Uncheck the ‘Sync with video’ option (b.) in the bottom right-hand corner.

Then, drag the pointer to the start of the synchronization map (c.).

Next, drag the video player to the start of the timeline (a.), at 0:00:000.

Once this is done, re-check the ‘Sync with video’ option (b.).

To synchronize the footage with the CSV file, use the 'Synchronization' tab.
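If the overlay still drifts after aligning the start, one possible cross-check (not part of the DashWare workflow itself) is to compare the length of the recorded segment in the CSV with the video's duration. The sketch below assumes the log has an 'isVideo' column and a millisecond timestamp column; the name 'time(millisecond)' is a hypothetical placeholder, so substitute the header from your own export. It returns the span of the first continuous run of rows where 'isVideo' is 1, in seconds.

```python
import csv


def first_recording_span(rows, time_col="time(millisecond)", flag="isVideo"):
    """Seconds between the first and last sample of the first recording run.

    `rows` is a list of dicts as produced by csv.DictReader. The run starts at
    the first row where `flag` is "1" and ends at the last consecutive such row.
    """
    start = end = None
    for row in rows:
        if row[flag].strip() == "1":
            t = float(row[time_col])
            if start is None:
                start = t
            end = t
        elif start is not None:
            break  # recording stopped: ignore any later clips in the same log
    if start is None:
        raise ValueError(f"no rows with {flag} == 1")
    return (end - start) / 1000.0


def recording_span_from_file(path, **kwargs):
    """Open a flight-log CSV and return first_recording_span() for it."""
    with open(path, newline="", encoding="utf-8-sig") as f:
        return first_recording_span(list(csv.DictReader(f)), **kwargs)
```

Because the span is measured between logged samples, it can fall short of the clip length by up to one logging interval. A much larger difference suggests the wrong log was loaded or the CSV was trimmed at the wrong row.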

(E) EXPORT PROJECT:

Once all attributes and data have been mapped, the final step is to export the video.

Click the 'File' button in the top left corner of the main window.

Select 'Create Video' and use the 'Browse' button to choose the location for the final file.

In the export dialog box, uncheck 'Auto' next to the Quality option to choose the export quality manually. Once decided, click on 'Create Video', and the export will be ready shortly.

'Create Video' dialog box.

In conclusion, DashWare has proven to be a valuable tool for integrating telemetry data with aerial footage in our conservation work. By allowing us to annotate videos with critical flight information, it enhances our ability to analyse and present data collected during drone missions. We hope this guide helps other conservationists and drone enthusiasts streamline their video processing workflows, making it easier to visualise and share the insights gathered from their aerial surveys.

*Note: This is a tutorial for using DashWare, based on my experience adapting video footage from DJI Mini/Mavic drones.