We use imagery from UAVs to calculate the heights and volume of individual mangrove trees, and can use this information to calculate the above-ground carbon stock within a given area.

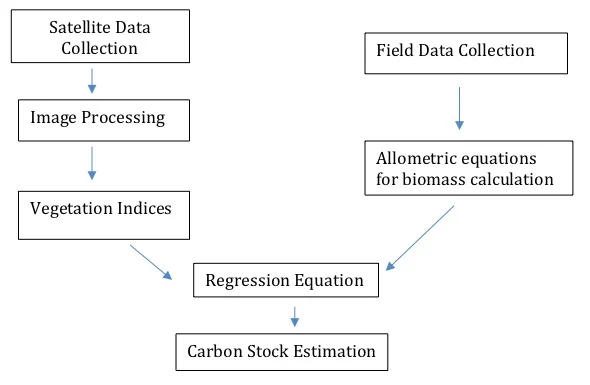

While quantifying the actual value of nature may not always be possible or even desirable, the scientific community has for some time been developing methods to quantify ecosystem services, in order to bolster the case for conserving those ecosystems. In our previous blogposts, we summarised methods used to estimate ecosystem carbon based on satellite imagery and field work. In this blogpost, the last in this series (for now), we examine potential methods of estimating carbon using Unmanned Aerial Vehicles (UAVs). With UAVs rapidly becoming more accessible, it is important for us to understand how they could be used to provide ecosystem carbon estimates that are more accurate and precise than those derived solely from satellite data.

We examine two broad approaches to assessing carbon using UAVs. The first is based on volumetric assessments of vegetation using UAVs, and the second is an attempt to use UAV-based imagery to mimic LiDAR-based methods for carbon estimation. We found several studies (Messinger, 2016; Shin, 2018; Mlambo, 2017) that demonstrated the use of UAVs for the assessment of vegetation biomass; while none of the articles we found subsequently estimated carbon, we think it's possible to adapt this method for carbon estimation as well.

A recent study by Warfield and Leon (2019) is a prime example of the first approach, that of assessing vegetation volumes using UAVs. They conducted a comparative analysis of UAV imagery and Terrestrial Laser Scanning (TLS) to capture the forest structure and volume of three mangrove sites. The data obtained from the UAV and TLS surveys were processed into point clouds, which were used to create 3D models from which the volume of the mangrove forest was estimated. A canopy height model (CHM) was created by subtracting a digital terrain model (DTM) from a digital surface model (DSM). This approach normalises object heights above ground, and as all the pixels in the image are linked to vegetation, the total volume is estimated by multiplying the canopy height value of each raster cell by the ground area that cell covers (which is set by the raster resolution) and summing over all cells. The UAV method produced lower height values in each patch of mangrove forest compared to the TLS surveying method, and its accuracy was found to be correlated with mangrove maturity. The difficulty of identifying fine-scale gaps in dense forest is one of the primary limitations of using UAVs to calculate aboveground biomass. This study highlighted the suitability of UAVs for calculating canopy volume in forests that are not very dense.
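The CHM and volume steps described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the processing chain used by Warfield and Leon (2019): the function names, the tiny 2 x 2 grids and the 0.5 m cell size are all made up for the example, and clamping negative heights to zero is our own assumption.

```python
def canopy_height_model(dsm, dtm):
    """Subtract terrain elevation (DTM) from surface elevation (DSM), cell by cell.

    Negative differences are clamped to 0 (an assumption made for this sketch).
    """
    return [
        [max(surface - terrain, 0.0) for surface, terrain in zip(dsm_row, dtm_row)]
        for dsm_row, dtm_row in zip(dsm, dtm)
    ]


def canopy_volume(chm, cell_size_m):
    """Sum (canopy height x cell ground area) over every cell of the raster."""
    cell_area_m2 = cell_size_m ** 2
    return sum(height * cell_area_m2 for row in chm for height in row)


# Hypothetical elevations in metres, purely for illustration.
dsm = [[5.0, 6.5], [4.0, 7.0]]
dtm = [[1.0, 1.5], [1.0, 1.0]]

chm = canopy_height_model(dsm, dtm)
volume_m3 = canopy_volume(chm, cell_size_m=0.5)
```

With real data, the two grids would be read from the DSM and DTM rasters and would need to share the same extent and resolution before being subtracted.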

Though carbon stock was not calculated in the Warfield and Leon (2019) study, it can theoretically be estimated from the values obtained for the canopy volume. As shown in a report published by the USDA (Woodall, 2011), biomass can be calculated using volume, density and mass relationships, as described in equation 1.

Bodw = Vgw * SGgw * W . . . (1)

where Bodw is the oven-dry biomass (lb) of wood, Vgw is the cubic volume (cubic feet) of green wood in the central stem, SGgw is the basic specific gravity of wood (i.e. the oven-dry mass per unit of green volume, relative to the density of water), and W is the weight of one cubic foot of water (62.4 lb).
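As a rough sketch, equation 1 and a metric wrapper around it might look like the following. The function names are our own, the conversion constants are standard unit conversions, and the specific gravity of 0.5 in the usage line is a placeholder rather than a value for any particular species. Note that the input is a wood volume; how to get from a UAV-derived canopy volume to a wood volume is not something this sketch addresses.

```python
WATER_LB_PER_FT3 = 62.4      # weight of one cubic foot of water, as in equation 1
LB_TO_KG = 0.45359237        # pounds to kilograms
FT3_PER_M3 = 35.3146667      # cubic feet in one cubic metre


def oven_dry_biomass_lb(green_volume_ft3, specific_gravity):
    """Equation 1: Bodw = Vgw * SGgw * W, with volume in cubic feet and result in lb."""
    return green_volume_ft3 * specific_gravity * WATER_LB_PER_FT3


def oven_dry_biomass_kg(green_volume_m3, specific_gravity):
    """Same relationship with volume in cubic metres and result in kg."""
    volume_ft3 = green_volume_m3 * FT3_PER_M3
    return oven_dry_biomass_lb(volume_ft3, specific_gravity) * LB_TO_KG


# Placeholder inputs: 10 cubic feet of green wood with a specific gravity of 0.5.
example_biomass_lb = oven_dry_biomass_lb(10.0, 0.5)
```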

Converting this into the metric system is a trivial calculation, and the resulting value for dry biomass can then be substituted into equation (2) to calculate carbon stock.

Cp = Bodw * CF . . . (2)

where Cp is the carbon stock within a plot, Bodw is the dry biomass in the plot and CF is the species-specific carbon fraction (Goslee, 2012).

In the case of mangroves, the value for carbon fraction would be in the 0.45 to 0.48 range; in a previous blogpost, we described how Bindu et al. (2018) use a factor of 0.4759 on the AGB to generate an estimate of carbon.
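Equation 2 is a single multiplication, sketched below. The function name and the 1000 kg example are our own; the default carbon fraction is the 0.4759 factor from Bindu et al. (2018) mentioned above and should be swapped for a species-specific value where one is available.

```python
def carbon_stock(dry_biomass, carbon_fraction=0.4759):
    """Equation 2: Cp = Bodw * CF. The result is in the same mass unit as dry_biomass."""
    if not 0.0 < carbon_fraction < 1.0:
        raise ValueError("carbon_fraction should be a fraction between 0 and 1")
    return dry_biomass * carbon_fraction


# Illustrative only: 1000 kg of dry biomass with the default carbon fraction.
example_carbon_kg = carbon_stock(1000.0)
```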

The second approach for using UAVs to estimate carbon is based on a study conducted by Messinger (2016) in the Peruvian Amazon. In this study, UAVs were used to create a 3D model of the forest, which was compared with data of the same forest obtained through a LiDAR survey conducted in 2009. In order to estimate carbon stocks in the forest, the authors used a formula and coefficients developed by Asner (2013), a method designed to estimate regional carbon stock using LiDAR data. For carbon estimation they used equation 3.

EACD = a * (TCH ^ b1) * (BA ^ b2) * (R ^ b3) . . . (3)

where EACD is estimated above ground carbon density, TCH is top of canopy height, BA is the regional average basal area, R is the regional average basal area-weighted wood density, and a, b1, b2, and b3 are coefficients estimated from the data.
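To make the structure of equation 3 concrete, here is a minimal sketch. The function name is ours, and the coefficient values in the usage line are arbitrary placeholders chosen so the arithmetic is easy to follow; they are not the coefficients from Asner (2013) or Messinger (2016), which would have to come from field calibration.

```python
def estimated_acd(tch, ba, r, a, b1, b2, b3):
    """Equation 3: EACD = a * TCH^b1 * BA^b2 * R^b3.

    tch: top of canopy height
    ba:  regional average basal area
    r:   regional average basal area-weighted wood density
    a, b1, b2, b3: coefficients fitted to field data (placeholders here)
    """
    return a * (tch ** b1) * (ba ** b2) * (r ** b3)


# Arbitrary placeholder inputs and coefficients, purely to show the power-law form.
example_eacd = estimated_acd(tch=3.0, ba=4.0, r=0.5, a=2.0, b1=1.0, b2=1.0, b3=1.0)
```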

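Basal area is defined as the area within a plot that is occupied by the cross-sections of tree trunks and stems. For a single tree it can be calculated from the diameter at breast height (DBH) using equation 4, which gives basal area in square feet when DBH is measured in inches.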

BA = 0.005454 * DBH^2 . . . (4)
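The constant in equation 4 is simply the area of a circle, pi * (DBH / 2)^2, with a conversion from square inches to square feet folded in: pi / (4 * 144) is approximately 0.005454. The sketch below shows this alongside a metric equivalent; the function names and example diameters are our own.

```python
import math

# pi / (4 * 144): circle area from a diameter in inches, expressed in square feet.
FORESTERS_CONSTANT = math.pi / (4 * 144)


def basal_area_sqft(dbh_inches):
    """Equation 4: basal area of one tree in square feet, from DBH in inches."""
    return 0.005454 * dbh_inches ** 2


def basal_area_m2(dbh_cm):
    """Metric equivalent: basal area of one tree in square metres, from DBH in cm."""
    radius_m = dbh_cm / 200.0  # cm to m, then diameter to radius
    return math.pi * radius_m ** 2


# Illustrative trees: one with a 10 inch DBH, one with a 20 cm DBH.
example_ba_sqft = basal_area_sqft(10.0)
example_ba_m2 = basal_area_m2(20.0)
```

Summing the per-tree values over a plot and dividing by the plot area gives basal area per unit area.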

We were unable to find a definite formula to calculate the regional average basal area-weighted wood density. The paper by Asner et al. (2013) uses coefficients based on fieldwork done by the authors and their team in Panama and does not specify the applicability of these coefficients to other forests. Since these coefficients are not specified as universal, it appears that one would have to conduct field work to calculate the variables for this formula. This, coupled with the ambiguity of measuring and calculating the regional average basal area-weighted wood density, makes this study difficult to replicate.

The calculation of the Top of Canopy Height also suffered a setback in this study. In order to derive the TCH, one has to first create a DTM (Digital Terrain Model) and CHM (Canopy Height Model). The method used in this paper requires the use of LiDAR data in order to calculate canopy height. In their study, the GPS on the drone was not accurate enough to create a good estimation of the CHM. They thus had to combine their UAV-based Structure-from-Motion model with LiDAR data to make this estimation. They state that this barrier can be overcome by the use of higher precision GPS where error in X, Y, and Z is less than 1 m, which is now possible using UAVs in conjunction with differential GPS (D-GPS) systems, such as the DJI Phantom 4 RTK.

In conclusion, we think that technology and research have advanced to a point where carbon stocks can be estimated using UAVs, but a clear methodology for doing so is still not publicly available. There is a need to synthesize existing methods into the most effective workflow based on these studies and current technology. We believe that having a clear and accessible method that has been tested for accuracy is crucial to bridge the gap between science, policy and conservation, and we’re going to be working on this over the next few months!

References

Asner, G. & Mascaro, J. (2013). Mapping tropical forest carbon: Calibrating plot estimates to a simple LiDAR metric. Elsevier.

Bindu, G., Rajan, P., Jishnu, E. S., & Joseph, K. A. (2018). Carbon stock assessment of mangroves using remote sensing and geographic information system. The Egyptian Journal of Remote Sensing and Space Science.

Goslee, K., Walker, S., Grais, A., Murray, L., Casaraim, F., Brown, S. (2012). Module C-CS: Calculations for Estimating Carbon Stocks. Winrock International.

Messinger, M., Asner, G., Silman, M. (2016). Rapid Assessments of Amazon Forest Structure and Biomass Using Small Unmanned Aerial Systems. MDPI (Remote Sensing), 8(8), 615.

Mlambo, R., Woodhouse, I., Gerard, F., Anderson, K. (2017). Structure from Motion (SfM) Photogrammetry with Drone Data: A Low Cost Method for Monitoring Greenhouse Gas Emissions from Forests in Developing Countries. MDPI (Forests), 8, 68.

Shin, P., Sankey, T., Moore, M., & Thode, A. (2018). Estimating Forest Canopy Fuels in a Ponderosa Pine Stand. Remote Sensing, 10, 1266.

Warfield, A., Leon, J. (2019). Estimating Mangrove Forest Volume Using Terrestrial Laser Scanning and UAV-Derived Structure-from-Motion. MDPI (Drones), 3, 32.

Woodall, C., Heath, L., Domke, G., & Nichols, M. (2011). Methods and Equations for Estimating Aboveground Volume, Biomass, and Carbon for Trees in the U.S. Forest Inventory, 2010. USDA (U.S. Forest Service).